A Path Towards Generative UI

Imagine if your app’s interface could literally design itself on the fly. Instead of fixed screens and layouts, the UI adapts in real time to what the user needs—guided by conversation context and structured data, powered by a large language model (LLM). This is the vision behind generative UI: letting an AI “go beyond text and generate UI,” creating a more engaging, AI-native user experience. In a generative UI, the LLM isn’t just chatting; it’s also deciding how to present information (e.g., as a chart, form, or map) and even which interactive controls to show next.

Why is this powerful? Traditional chatbots rely on text or pre-defined buttons, but text isn’t always the best interface. As one developer described Google’s next-gen Gemini AI:

“Gemini can use its brains to decide if it would be better to use a more productive UI than text. When it decides to do this it uses Flutter to create the UI.”

In other words, if showing a custom widget or screen helps the user more than a wordy answer, the AI can generate that UI on the spot. Flutter—with its flexible, declarative UI framework—serves as an ideal canvas for this kind of dynamic interface.

The Generative UI Experience: Data + Conversation = UI

In an ideal generative UI experience, user interfaces are composed dynamically based on structured data and the ongoing conversation. The LLM acts like a savvy UX designer who understands the user’s intent from the chat history and has access to the user’s data or query results. It then picks or assembles UI components that best present that information in context.

For example, consider a travel assistant scenario. A user might ask, “I’m looking for warm travel destinations in Europe.” Instead of replying with a long text list, an LLM-powered UI could display a set of interactive options—buttons or cards for each destination—directly in the chat. The user can tap one of these choices instead of typing a response.

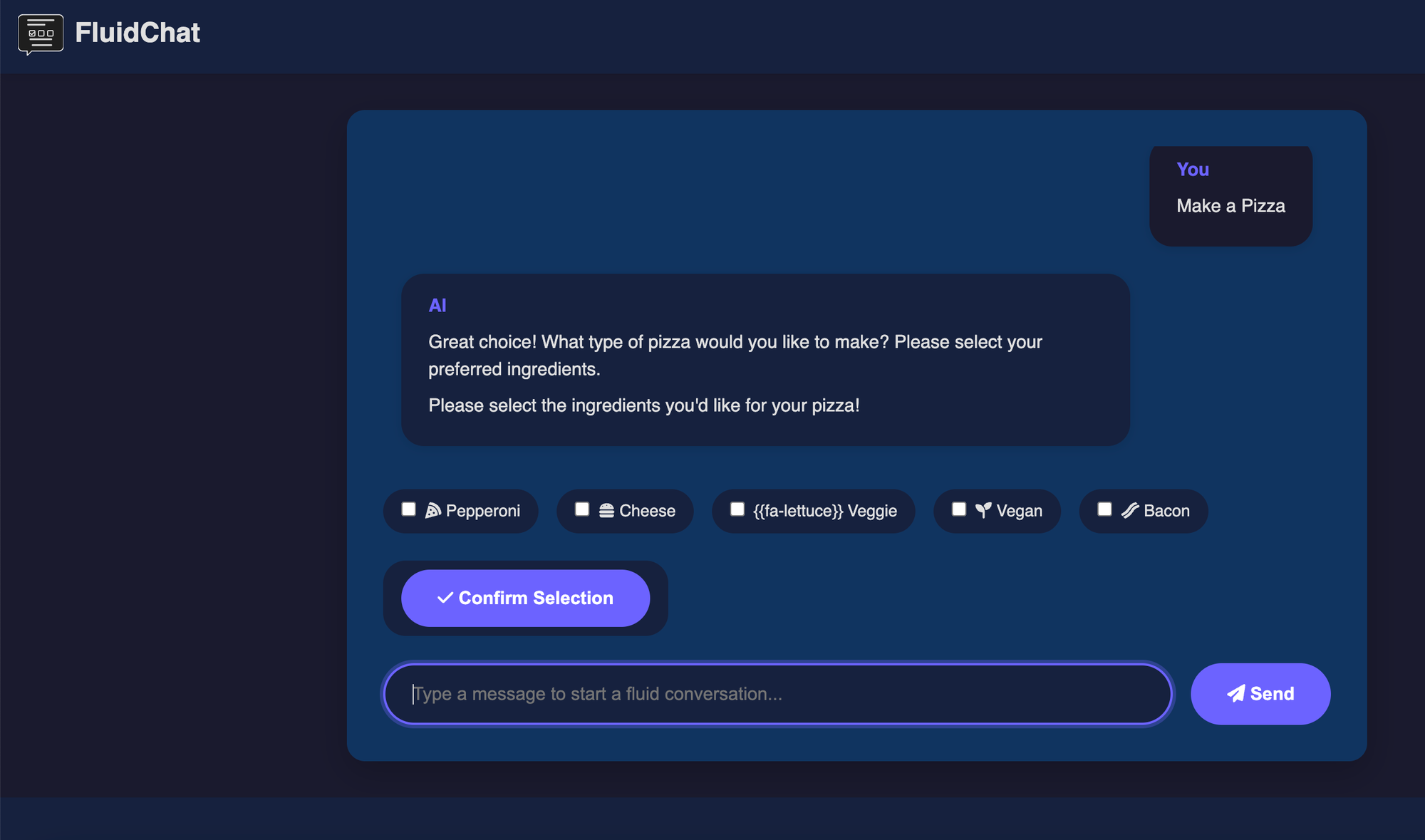

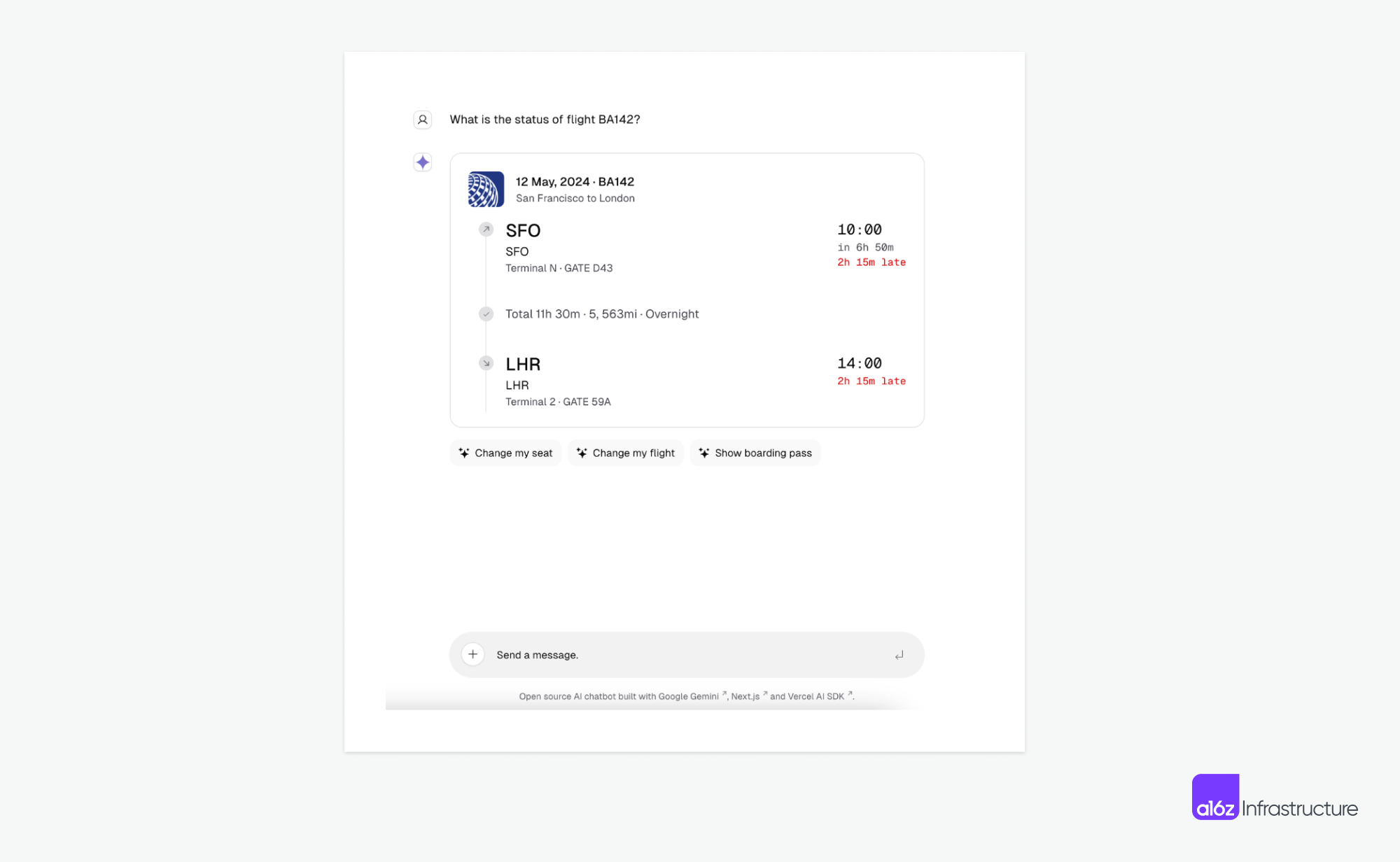

Another scenario: an AI assistant in a flight booking app. Suppose you ask, “What is the status of flight BA142?” A generative UI could not only answer with text but also show a stylized flight status card (with a flight timeline, departure/arrival details, and delay notifications) and action buttons like “Change my seat” or “Show boarding pass”.

Figure 1: An example of a generative UI in a chat assistant. The LLM presents options (e.g., “Barcelona, Spain”, “Lisbon, Portugal”) as clickable buttons rather than just text, enhancing interactivity.

Figure 2: Example of an AI-generated flight status card (BA142). The assistant displays structured information along with interactive follow-up options, rendered via Flutter’s engine.

In this vision, the UI evolves as the conversation progresses. Early on, it might be mostly text; later, as the AI gathers more structured info, it introduces relevant widgets—a chart for finances, a map for travel directions, or a form for further details. The UI is just-in-time generated to suit the user’s needs.

UI Output Requirements: Visual Cues and Contextual Emphasis

Designing such fluid UIs requires careful thought about how to present changes and highlight context:

-

Visual Change Indicators:

New or updated content should be clearly indicated—via subtle highlights, animations, or badges (e.g., a “new” label on an updated widget). -

Contextual Emphasis:

The UI should stress what’s most important at any moment. For instance, if a long response is generated, it might be placed in a side panel to keep the primary conversation uncluttered. -

Adaptive Formatting:

Elements like key numbers or dates might be rendered in larger, bolder text or use different colors to stand out based on context.

UI Input Modalities: Beyond Keyboard and Touch

Generative UIs shine when they accept richer input modalities beyond just text:

-

Direct Manipulation (Touch & Click):

Users interact with dynamically generated buttons, sliders, or cards. Taps and gestures are captured and sent back as part of the conversation context. -

Gesture Input:

On touchscreen devices, gestures (e.g., swiping, pinching, or dragging) can filter or rearrange data. Advanced systems might even support complex gestures, further enhancing control. -

Voice Input:

A voice command can trigger both a verbal response and a dynamic UI update, making the interaction more natural. -

Continuous Inputs & Sensors:

Future systems might adjust UIs based on device orientation, location, or even biometric feedback.

The key challenge is fusion: integrating these various input types so that the LLM correctly interprets the user’s intent.

Key Technical Concepts

Data Schema

A data schema defines the structure of the information to be displayed. For instance, a weather app might use a schema like { date, temperature, condition, location }, while a contacts app might use { name, phone, email }. Understanding the schema is critical to determining which UI template fits best.

UI Template

A UI template is a blueprint for a particular layout type—such as a list, detail view, form, or chart. In a generative UI, the LLM selects and composes these templates to match the data schema, ensuring consistency and quality. Templates can be parameterized to adjust style and layout dynamically.

Declarative UI System

Frameworks like Flutter use a declarative UI approach, where the UI is a function of its current state. The LLM can output a widget tree (or structured representation) that Flutter renders directly. This paradigm simplifies updates since only the changed parts of the state need to be re-rendered.

Templates + LLM: Adapting to Any Data Schema

The goal is to leverage a fixed set of adaptable UI templates to cover a broad range of data schemas. Here, the LLM’s role is to:

-

Classify Data Patterns:

Identify if the data fits a list, detail view, form, dashboard, etc. -

Parameterize Templates:

Select a template variant (e.g., compact vs. detailed) and adjust style parameters (theme, font size, etc.). -

Schema-Driven Binding:

Map data fields to template slots. For example, aWeatherCardtemplate might require fields liketemperatureandcondition, regardless of the exact data schema. -

Fallback Options:

If data doesn’t neatly match any template, a generic fallback (such as a key-value table) can be used.

Note: Treating UI components as function calls (e.g.,

displayWeather(data)) allows the LLM to reference pre-built templates. This approach mirrors techniques seen in the Vercel AI SDK for React—adapted here for Flutter.

By using a limited set of templates, the system ensures consistency, predictability, and safety, even as the LLM dynamically composes the interface.

Conversationally Evolving the UI Templates

Generative UIs can evolve over the course of a conversation, both at the user level and the developer/system level:

-

User-Level Refinement:

A user might say, “Show that as a bar chart instead of a line chart,” prompting the AI to swap templates on the fly. -

Developer-Level Evolution:

Developers can interact with the AI to refine existing templates or propose new ones, gradually building a robust library. -

Stateful Adjustments:

The system tracks UI state (e.g., user selections or input values) to ensure that incremental changes integrate smoothly without losing context.

This iterative process allows the UI to be personalized and evolve dynamically based on both immediate user feedback and long-term usage patterns.

LLM-Generated Flutter Code: Under the Hood

There are several technical approaches to having an LLM generate Flutter-based UIs:

-

Direct Dart/Flutter Code Generation:

The LLM outputs Dart code for Flutter widgets directly, effectively acting as a coding assistant. This approach leverages Flutter’s hot reload for rapid testing but requires safeguards to ensure valid, secure code. -

Structured Representation (DSL or JSON):

Instead of raw code, the LLM can output a structured description (e.g., JSON) that a Flutter app interprets to build the UI. This method is safer and easier to validate, as the JSON describes a widget tree that the app “inflates” into actual widgets. -

Hybrid: Template Referencing:

The LLM outputs commands likeUseTemplate("WeatherCard", data), and the Flutter app maps that to a pre-defined widget. This approach limits potential errors while still providing dynamic UI generation.

Each method has trade-offs in flexibility, safety, and performance but aims to integrate LLM outputs with Flutter’s rendering engine for a seamless user experience.

Challenges and Research Opportunities

While generative UI holds great promise, several challenges remain:

-

Schema Generalization & Alignment:

Ensuring the LLM correctly maps arbitrary data schemas to a UI template is non-trivial. Techniques such as few-shot learning and detailed schema descriptions can help. -

Style Consistency and Transfer:

The generated UI must match the app’s design language. Multiple style variants and preference libraries can guide the LLM in selecting the right visual presentation. -

Multimodal and Contextual Inputs:

Integrating text, touch, voice, and sensor data requires robust fusion techniques so that the LLM accurately interprets user intent. -

Incremental Updates & UI State Tracking:

The system must update the UI smoothly—without jarring transitions or loss of user input—using techniques like UI diffing and Flutter’s state management. -

Performance and Latency:

While LLMs may introduce delays, strategies like caching, local model inference, and pre-computed responses can help ensure a responsive experience. -

Safety & Reliability:

Constraining the AI’s output via templates or DSLs reduces risks, but robust testing, validation, and fallback mechanisms are essential. -

Ethical and UX Considerations:

Users must understand when the interface is being dynamically generated. Transparency, clear feedback, and the option to override AI decisions are crucial.

Conclusion

Generative UI represents a paradigm shift in software design—where the interface adapts dynamically based on structured data and conversational context. By combining Flutter’s declarative UI framework with the creative potential of LLMs, we move toward interfaces that are not static but evolve intelligently to meet user needs.

- Data Schemas define the structure of the information.

- UI Templates offer a consistent, adaptable way to display that information.

- Declarative UI frameworks like Flutter make it possible to render these dynamic interfaces seamlessly.

Despite challenges in schema alignment, style consistency, multimodal input integration, and performance, the future is promising. Ongoing research—from projects like CrowdGenUI to experimental demos using Google’s Gemini—indicates that the dream of fully adaptive, AI-driven interfaces is becoming reality.

For developers, this means harnessing AI as both a backend tool and a co-designer of the interface. As the field advances, expect to see more dynamic, context-aware UIs that blend human creativity with AI-driven precision.

References

- Vercel AI SDK Documentation – Generative User Interfaces

- Medium (Tech Vibes) – Designing AI-Generated UI with Flutter and GPT Models

- Reddit (r/FlutterDev) – Discussion on Google Gemini using Flutter for AI-generated UIs

- HuggingFace Blog – LLM Chatbots 3.0: Merging LLMs with Dynamic UI Elements

- Jason Bejot – Designing LLM Interfaces: Claude’s Side Panels for Long Responses

- Brasch (Medium) – Context-Seeking Dynamic GUI for LLMs

- Sanoop Das (Medium) – Dynamic UI Generation in Flutter via JSON

- a16z – How Generative AI is Remaking UI/UX Design

- ArXiv – CrowdGenUI: Enhancing LLM-Based UI Generation with User Preferences

- ArXiv/CHI ’24 – GazePointAR: Multimodal VA with Gaze and Gestures + LLM

- DhiWise Blog – Declarative vs. Imperative UI in Flutter

- LinkedIn (Ashish Chaudhary) – Bespoke: LLM-Generated Just-in-Time Interfaces (Google Research)